- Sensors

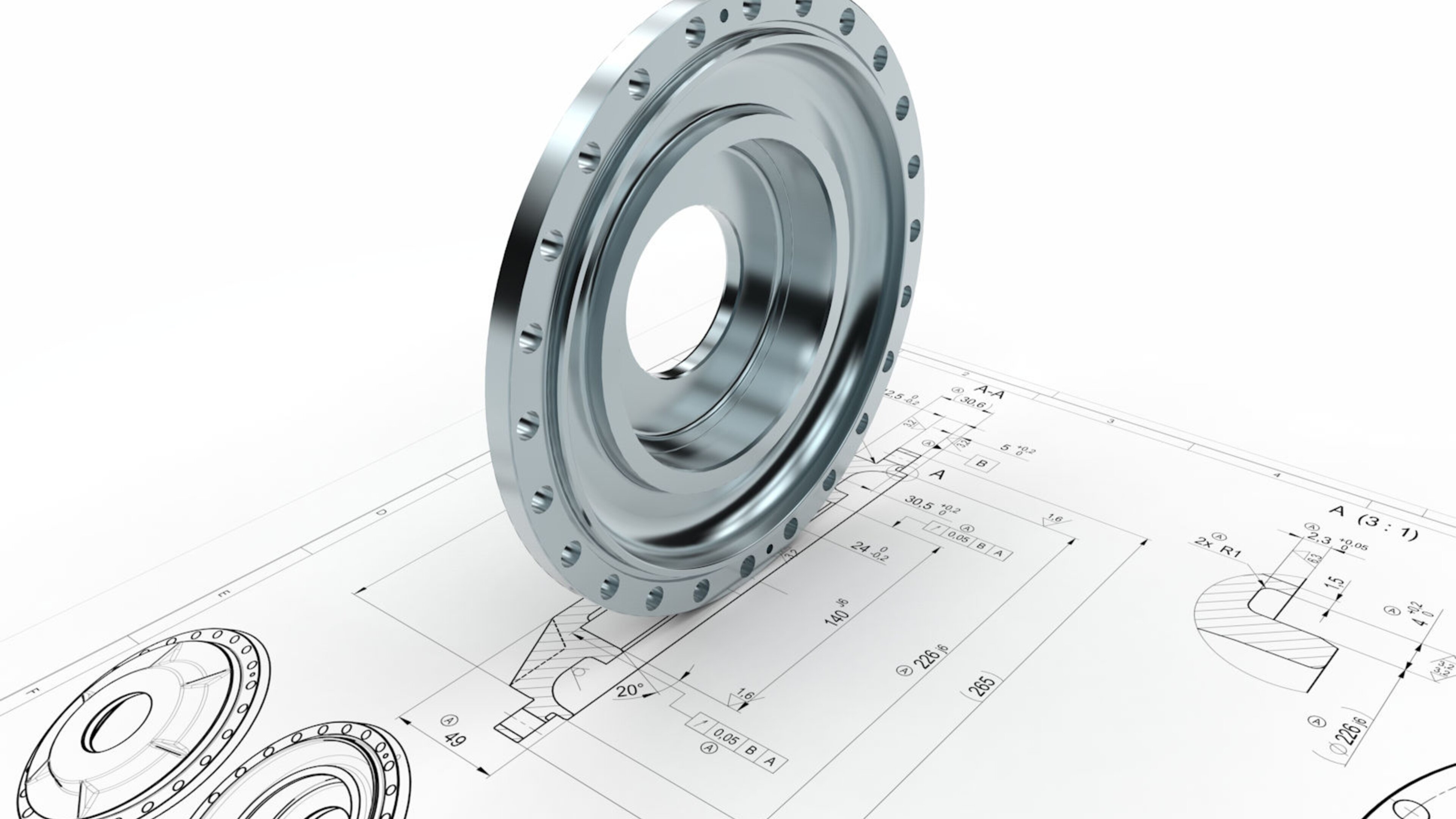

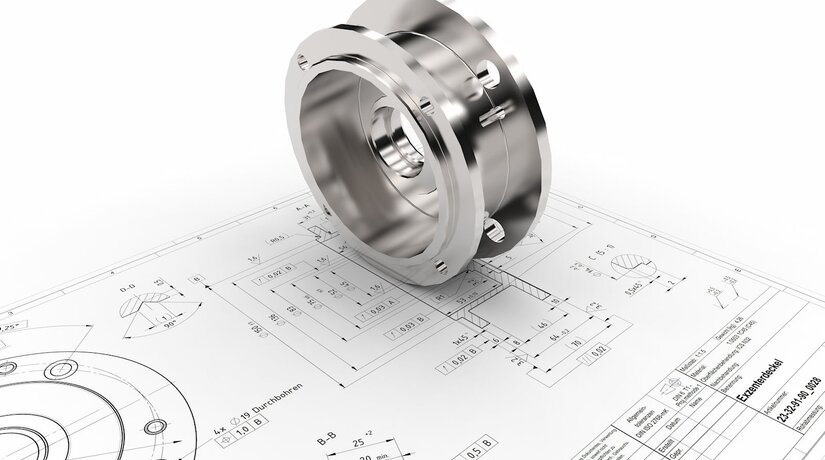

  - Force Sensors
  - Torque Sensors
  - Strain Sensors
  - Displacement Sensors
  - Acceleration Sensors
  - Temperature Sensors
  - Sensor Accessories
    - K6D Accessories
    - Clamping Box
    - Sensor Configuration
    - AS28 Accessories
    - K3R Accessories
    - K3D Accessories
    - KM38 Accessories
    - Measuring Case
    - PCB Accessories
    - KDs Accessories
    - KR Accessories
    - KMz Accessories
  - END-OF-LIFE Sensors

- Electronics

  - 1-Channel Measuring Amplifier
  - Multi-Channel Measuring Amplifier
  - Multi-Channel Measuring Amplifier with Interface
  - Multi-Channel Measuring Amplifier with Analog Outputs
  - Wireless Measuring Amplifier
  - END-OF-LIFE Electronics
  - Electronics Accessories

- Strain Gauges

  - Foil Strain Gauges
  - Semiconductor Gauges
  - Strain Gauge Accessories
    - Resistors
    - Foils and Tapes
    - Toolbox
    - Adhesives
    - Surface Cleaning
    - Protective Coatings
    - Soldering Terminals
    - Wires and Stranded Wires
    - Tools
    - Solder and Flux
  - END-OF-LIFE Strain Gauges

- Basics

  - Basics of Sensors
  - Basics of Measuring Electronics
  - Basics of Strain Gauges
    - Bridge Circuit
    - Variants of Bridge Circuits
    - Stress Analysis
    - Selection of Strain Gauges
    - Basic Equipment
    - Wiring Diagrams
    - Shunt Calibration
    - Crack Gauges
  - Catalogs
  - Literature
  - Unit Conversion